1. Environment

1.1 JDK

/opt/jdk1.7.0_79

1.2 Drill version

/opt/apache-drill-1.7.0

1.3 ZooKeeper addresses

SZB-L0032013:2181,SZB-L0032014:2181,SZB-L0032015:2181

1.4 Drill cluster servers

10.20.25.199 SZB-L0032015

10.20.25.241 SZB-L0032016

10.20.25.137 SZB-L0032017

2. Drill cluster

2.1 Changes to drill-override.conf

drill.exec: { cluster-id: "szb_drill_cluster", zk.connect: "SZB-L0032013:2181,SZB-L0032014:2181,SZB-L0032015:2181" }
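zk.connect is a comma-separated list of host:port pairs, and the same quorum string comes back later in the ODBC settings. For scripts that need the individual ZooKeeper nodes, a minimal Python sketch of splitting it up (the helper name parse_zk_connect is hypothetical, and the 2181 fallback for entries without a port is an assumption):

```python
from typing import List, Tuple

# Quorum string copied from drill-override.conf above.
ZK_CONNECT = "SZB-L0032013:2181,SZB-L0032014:2181,SZB-L0032015:2181"


def parse_zk_connect(value: str, default_port: int = 2181) -> List[Tuple[str, int]]:
    """Split a zk.connect value into (host, port) pairs."""
    nodes = []
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate a trailing comma
        host, sep, port = entry.partition(":")
        # Assumption: an entry without ":port" uses the ZooKeeper default 2181.
        nodes.append((host, int(port) if sep else default_port))
    return nodes
```

For this cluster, parse_zk_connect(ZK_CONNECT) yields the three SZB-L00320xx hosts, each with port 2181.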

2.2 Add to drill-env.sh

export HADOOP_HOME="/opt/cloudera/parcels/CDH"

2.3 Start the cluster

Run this command on every node, from Drill's bin directory: ./drillbit.sh start
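Instead of logging in to each node, the start command can be pushed out from one machine. A minimal Python sketch, assuming passwordless SSH as root to every node, Drill installed under the same path everywhere, and JAVA_HOME visible to non-interactive SSH sessions; the helper names are hypothetical:

```python
import subprocess
from typing import Callable, Dict, List, Sequence

DRILL_HOME = "/opt/apache-drill-1.7.0"
NODES = ["SZB-L0032015", "SZB-L0032016", "SZB-L0032017"]  # from section 1.4


def drillbit_command(host: str, action: str = "start") -> List[str]:
    """Build the ssh argv that runs drillbit.sh <action> on one host."""
    if action not in ("start", "stop", "restart", "status"):
        raise ValueError(f"unsupported action: {action}")
    return ["ssh", f"root@{host}", f"{DRILL_HOME}/bin/drillbit.sh", action]


def run_on_all(hosts: Sequence[str], action: str = "start",
               runner: Callable[..., subprocess.CompletedProcess] = subprocess.run
               ) -> Dict[str, int]:
    """Run the action on each host in turn and return {host: exit code}."""
    results = {}
    for host in hosts:
        # runner is injectable so the loop can be dry-run without SSH.
        proc = runner(drillbit_command(host, action), capture_output=True, text=True)
        results[host] = proc.returncode
    return results
```

run_on_all(NODES, "status") is a quick way to repeat the status check below on all three nodes; a non-zero exit code points at the node to inspect.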

1. Check the Drillbit:

[root@SZB-L0032017 bin]# ./drillbit.sh status
drillbit is running.

2. Check the ZooKeeper configuration:

./zookeeper-client -server SZB-L0032013:2181

[zk: SZB-L0032013:2181(CONNECTED) 0] ls /

[drill, hive_zookeeper_namespace_hive, hbase, zookeeper]

[zk: SZB-L0032013:2181(CONNECTED) 1] quit

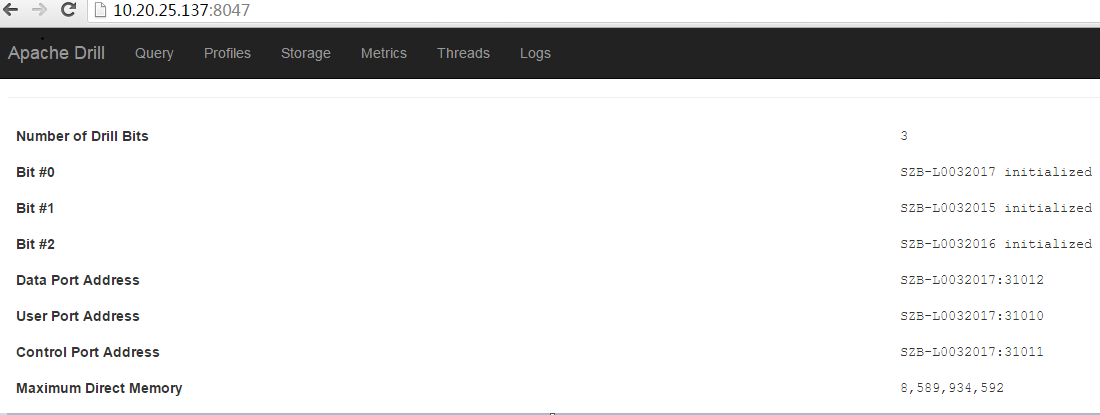

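3. Check the web console (port 8047 on each node by default): the screenshot above shows that all three nodes of the Drill cluster are configured and running normally.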
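The drill znode in the ZooKeeper listing is where the Drillbits register: each running Drillbit should appear as one child of /drill/<cluster-id> (in zookeeper-client, ls /drill/szb_drill_cluster). A minimal Python sketch of the same check, assuming Drill's default zk.root of "drill"; the client object is anything with a get_children(path) method, such as kazoo's KazooClient, and the helper names are hypothetical:

```python
from typing import List

ZK_ROOT = "drill"                 # Drill's default zk.root
CLUSTER_ID = "szb_drill_cluster"  # cluster-id from drill-override.conf


def drillbit_path(cluster_id: str, zk_root: str = ZK_ROOT) -> str:
    """Znode under which Drillbits of one cluster register themselves."""
    return f"/{zk_root}/{cluster_id}"


def registered_drillbits(zk, cluster_id: str = CLUSTER_ID) -> List[str]:
    """Return the registration znodes (one per running Drillbit), sorted."""
    return sorted(zk.get_children(drillbit_path(cluster_id)))
```

With kazoo installed, something like zk = KazooClient(hosts="SZB-L0032013:2181"); zk.start(); registered_drillbits(zk); zk.stop() should return three entries for this cluster.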

2.4 Query tests

1. Query a local file:

select * from dfs.`/opt/apache-drill-1.7.0/sample-data/nation.parquet` LIMIT 20

2. Create an hdfs storage plugin and test it:

$ hdfs dfs -mkdir /user/drill

$ hdfs dfs -chown -R root:root /user/drill

$ hdfs dfs -chmod -R 777 /user/drill

$ hdfs dfs -copyFromLocal /opt/apache-drill-1.7.0/sample-data/nation.parquet /user/drill

select * from hdfs.`/user/drill/nation.parquet` LIMIT 20

3. Create a hive storage plugin and test it:

SELECT * FROM hive.`student`

4. Create an hbase storage plugin and test it:

SELECT CONVERT_FROM(row_key, 'UTF8') AS id, CONVERT_FROM(student.info.name, 'UTF8') AS name, CAST(student.info.age AS INT) AS age FROM hbase.`student`

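Note: HBase stores every value as a raw byte array (here, UTF-8 encoded data), so the query has to decode the bytes and convert them to the right types.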
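What the conversion has to do depends on how the writer encoded each cell. A small Python illustration with made-up cell values: CONVERT_FROM(col, 'UTF8') corresponds to decoding the bytes as UTF-8, while a number written as a 4-byte big-endian integer would need Drill's CONVERT_FROM(col, 'INT_BE') instead. The helper names are hypothetical:

```python
import struct

# Made-up raw cell values, as HBase would hand them to Drill.
row_key = b"1001"                   # row key written as UTF-8 text
name_cell = b"Tom"                  # info:name written as UTF-8 text
age_as_text = b"20"                 # info:age written as the string "20"
age_as_int = struct.pack(">i", 20)  # info:age written as a 4-byte big-endian int


def utf8(cell: bytes) -> str:
    """Rough equivalent of CONVERT_FROM(cell, 'UTF8')."""
    return cell.decode("utf-8")


def int_be(cell: bytes) -> int:
    """Rough equivalent of CONVERT_FROM(cell, 'INT_BE') for a 4-byte value."""
    return struct.unpack(">i", cell)[0]
```

utf8(age_as_text) gives "20", which can then be cast to an integer, whereas utf8(age_as_int) gives four control characters; only int_be recovers 20 from the binary form.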

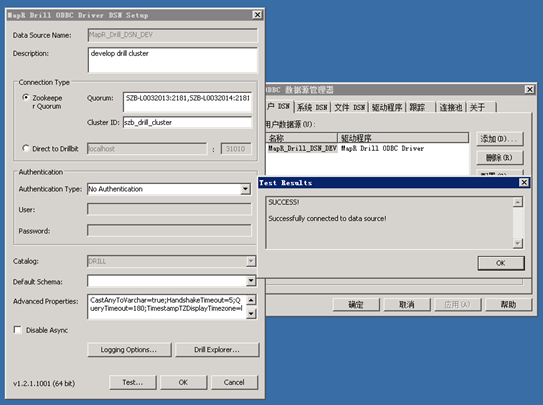
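The same test queries can also be sent without the web console, through Drill's REST API on the web port (POST /query.json). A minimal standard-library Python sketch, assuming the default port 8047, no authentication, and SZB-L0032017 as the target node; the helper names are hypothetical:

```python
import json
import urllib.request
from typing import Any, Dict

DRILLBIT = "SZB-L0032017"

TEST_QUERIES = [
    "SELECT * FROM dfs.`/opt/apache-drill-1.7.0/sample-data/nation.parquet` LIMIT 20",
    "SELECT * FROM hdfs.`/user/drill/nation.parquet` LIMIT 20",
    "SELECT * FROM hive.`student`",
]


def query_request(host: str, sql: str, port: int = 8047) -> urllib.request.Request:
    """Build a POST /query.json request carrying one SQL statement."""
    body = json.dumps({"queryType": "SQL", "query": sql}).encode("utf-8")
    return urllib.request.Request(
        f"http://{host}:{port}/query.json",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_query(host: str, sql: str, timeout: float = 60.0) -> Dict[str, Any]:
    """Send the query and return the decoded JSON response."""
    with urllib.request.urlopen(query_request(host, sql), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

run_query(DRILLBIT, TEST_QUERIES[0]) should return an object whose "columns" and "rows" entries hold the nation table result.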

3. Access from Tableau

3.1 Install the Drill ODBC driver provided by MapR

3.2 Configuration

Use the same values as in drill-override.conf:

cluster-id: szb_drill_cluster
zk.connect: SZB-L0032013:2181,SZB-L0032014:2181,SZB-L0032015:2181
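For clients other than Tableau, the same two values can go into a DSN-less ODBC connection string. A minimal Python sketch; the key names ConnectionType, ZKQuorum and ZKClusterID and the driver name "MapR Drill ODBC Driver" are taken from our understanding of the MapR driver's sample configuration and should be checked against the installed driver version. The helper name is hypothetical:

```python
from typing import Dict


def drill_odbc_conn_str(zk_quorum: str, cluster_id: str,
                        driver: str = "MapR Drill ODBC Driver") -> str:
    """Build a ZooKeeper-mode connection string for the Drill ODBC driver."""
    parts: Dict[str, str] = {
        "Driver": "{" + driver + "}",  # braces protect the spaces in the name
        "ConnectionType": "ZooKeeper",  # assumed key: connect via the quorum
        "ZKQuorum": zk_quorum,          # same value as zk.connect
        "ZKClusterID": cluster_id,      # same value as cluster-id
    }
    return ";".join(f"{key}={value}" for key, value in parts.items())
```

The resulting string could then be passed to an ODBC client library such as pyodbc.connect.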

3.3 In Tableau, simply choose the "Other Databases (ODBC)" connection

4. Storage plugin configuration for high-availability (HA) HDFS

4.1 Plugin definition

{
  "type": "file",
  "enabled": true,
  "connection": "hdfs://mycluster",
  "config": null,
  "workspaces": {
    "root": {
      "location": "/",
      "writable": false,
      "defaultInputFormat": null
    },
    "tmp": {
      "location": "/tmp",
      "writable": true,
      "defaultInputFormat": null
    }
  },
  "formats": {
    "psv": {
      "type": "text",
      "extensions": ["tbl"],
      "delimiter": "|"
    },
    "csv": {
      "type": "text",
      "extensions": ["csv"],
      "delimiter": ","
    },
    "tsv": {
      "type": "text",
      "extensions": ["tsv"],
      "delimiter": "\t"
    },
    "parquet": {
      "type": "parquet"
    },
    "json": {
      "type": "json",
      "extensions": ["json"]
    },
    "avro": {
      "type": "avro"
    },
    "sequencefile": {
      "type": "sequencefile",
      "extensions": ["seq"]
    },
    "csvh": {
      "type": "text",
      "extensions": ["csvh"],
      "extractHeader": true,
      "delimiter": ","
    }
  }
}
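Besides pasting this JSON into the Storage page of the web console, the plugin can be created or updated through Drill's REST API (POST /storage/<name>.json with a body of the form {"name": ..., "config": ...}). A minimal Python sketch, assuming the plugin name "hdfs", the default port 8047, no authentication, and a hypothetical file hdfs-ha-plugin.json holding the definition above; the helper names are hypothetical:

```python
import json
import urllib.request
from typing import Any, Dict


def storage_request(host: str, name: str, config: Dict[str, Any],
                    port: int = 8047) -> urllib.request.Request:
    """Build POST /storage/<name>.json carrying {"name": ..., "config": ...}."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        f"http://{host}:{port}/storage/{name}.json",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def register_plugin(host: str, name: str, path: str) -> Dict[str, Any]:
    """Load a plugin definition from a JSON file and submit it to one Drillbit."""
    with open(path, encoding="utf-8") as fh:
        config = json.load(fh)
    # Guard against pushing a local-filesystem definition by mistake.
    if not str(config.get("connection", "")).startswith("hdfs://"):
        raise ValueError("expected an hdfs:// connection URI")
    with urllib.request.urlopen(storage_request(host, name, config), timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Since plugin definitions in a distributed setup are kept in ZooKeeper, submitting to one node (e.g. register_plugin("SZB-L0032017", "hdfs", "hdfs-ha-plugin.json")) should be enough for the whole cluster.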

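4.2 HDFS configuration files

Copy the cluster's Hadoop configuration files into Drill's conf directory on every Drill node, so that Drill can resolve the nameservice mycluster used in the plugin's connection URI: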

[root@HDP03 log]# cp hdfs-site.xml /usr/local/apache-drill-1.7.0/conf/

[root@HDP03 log]# cp core-site.xml /usr/local/apache-drill-1.7.0/conf/

[root@HDP03 log]# cp mapred-site.xml /usr/local/apache-drill-1.7.0/conf/
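A quick way to confirm that the copied hdfs-site.xml really defines the nameservice named in the plugin is to read the standard Hadoop HA properties (dfs.nameservices, dfs.ha.namenodes.<ns>, dfs.namenode.rpc-address.<ns>.<id>). A minimal Python sketch; the sample XML and the NameNode hosts namenode1/namenode2 are illustrative only, and the helper names are hypothetical:

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# Illustrative fragment; a real file would be read from Drill's conf directory.
SAMPLE = """<configuration>
  <property><name>dfs.nameservices</name><value>mycluster</value></property>
  <property><name>dfs.ha.namenodes.mycluster</name><value>nn1,nn2</value></property>
  <property><name>dfs.namenode.rpc-address.mycluster.nn1</name><value>namenode1:8020</value></property>
  <property><name>dfs.namenode.rpc-address.mycluster.nn2</name><value>namenode2:8020</value></property>
</configuration>"""


def hadoop_props(xml_text: str) -> Dict[str, str]:
    """Flatten a Hadoop *-site.xml into {name: value}."""
    props = {}
    for prop in ET.fromstring(xml_text).iter("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def namenodes(props: Dict[str, str], nameservice: str) -> List[str]:
    """Return the NameNode RPC addresses behind an HA nameservice."""
    services = [s.strip() for s in props.get("dfs.nameservices", "").split(",")]
    if nameservice not in services:
        raise KeyError(f"{nameservice} is not listed in dfs.nameservices")
    ids = [i.strip() for i in props.get(f"dfs.ha.namenodes.{nameservice}", "").split(",") if i.strip()]
    return [props[f"dfs.namenode.rpc-address.{nameservice}.{i}"] for i in ids]
```

Run against /usr/local/apache-drill-1.7.0/conf/hdfs-site.xml (read with open(..., encoding="utf-8")), namenodes(props, "mycluster") should list both NameNodes; a KeyError means the plugin's hdfs://mycluster cannot be resolved.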

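5. Log aggregation: changes to drill-override.conf

By default Drill's ZooKeeper-based store writes query profiles to the local log directory of each Drillbit; pointing sys.store.provider.zk.blobroot at HDFS collects the profiles of all nodes in one shared directory.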

drill.exec: {
  cluster-id: "szb_drill_cluster",
  zk.connect: "SZB-L0032013:2181,SZB-L0032014:2181,SZB-L0032015:2181",
  sys.store.provider.zk.blobroot: "hdfs://SZB-L0032014:8020/user/drill/pstore"
}
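Once all Drillbits share the HDFS blob root, the completed-query profiles listed by any node should be the same. A minimal Python sketch that fetches Drill's profiles listing (GET /profiles.json) from each node for comparison, assuming the default port 8047 and no authentication; the response is returned as-is, and the helper names are hypothetical:

```python
import json
import urllib.request
from typing import Any, Dict, List

NODES = ["SZB-L0032015", "SZB-L0032016", "SZB-L0032017"]  # from section 1.4


def profiles_urls(hosts: List[str], port: int = 8047) -> List[str]:
    """One /profiles.json URL per Drillbit."""
    return [f"http://{host}:{port}/profiles.json" for host in hosts]


def fetch_profiles(url: str, timeout: float = 10.0) -> Dict[str, Any]:
    """Download and decode the profiles listing from one Drillbit."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Comparing fetch_profiles(url) across profiles_urls(NODES) after running a few queries is a simple check that the blob root is actually shared.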