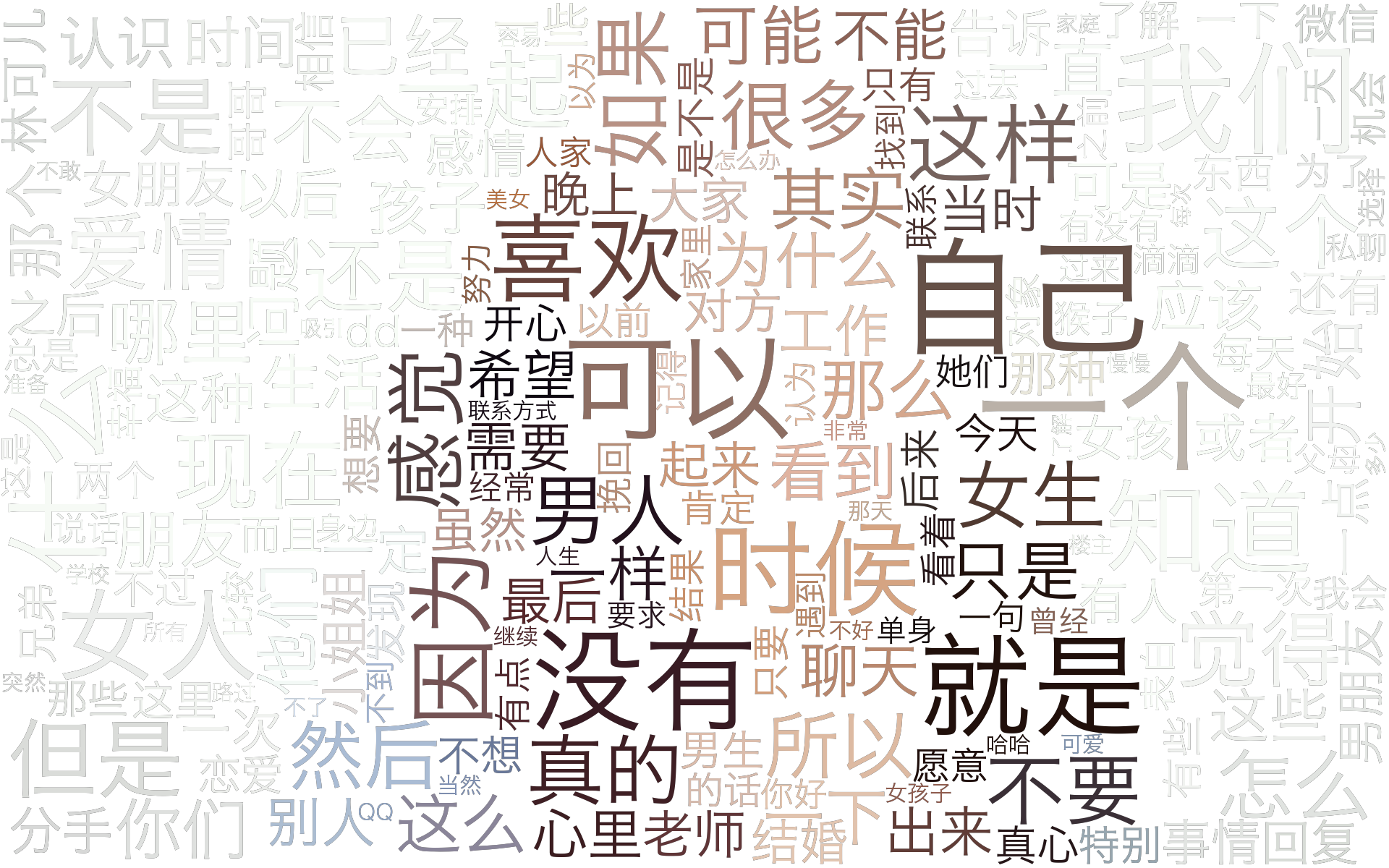

很久没写博客,试着爬了一下百度贴吧的内容,用词云展示下结果

一、爬取内容

想看看大家眼里的爱情怎么样的,于是去逛了下百度的爱情贴吧,把发表的言论都爬下来,代码如下

import re

import csv

import json

import requests

import pandas as pd

import time

from bs4 import BeautifulSoup

from lxml import etree

from fake_useragent import UserAgent

import time

import random

proxy=['112.114.76.176:6668','222.172.239.69:6666','112.114.78.54:6673','121.31.103.33:6666','110.73.30.246:6666','113.121.245.32:6667','114.239.253.38:6666','116.28.106.165:6666','220.179.214.77:6666','110.73.32.7:6666','118.80.181.186:6675','60.211.17.10:6675','110.72.20.245:6673','114.139.48.8:6668','111.124.231.101:6668','110.73.33.207:6673','113.122.42.161:6675','122.89.138.20:6675','61.138.104.30:1080','121.31.199.91:6675','218.56.132.156:8080','218.56.132.156:8080','220.249.185.178:9999','60.190.96.190:808','121.31.196.109:8123','121.31.196.109:8123','61.135.217.7:80','61.135.217.7:80','61.155.164.109:3128','61.155.164.109:3128','61.155.164.110:3128','124.89.33.75:9999','124.89.33.75:9999','113.200.214.164:9999','113.200.214.164:9999','119.90.63.3:3128','112.250.65.222:53281','112.250.65.222:53281','222.222.169.60:53281','122.136.212.132:53281','122.136.212.132:53281','58.243.50.184:53281','58.243.50.184:53281','125.66.140.27:53281','125.66.140.27:53281','139.224.24.26:8888','139.224.24.26:8888']

address_list=[]

for i in range(0,1050,50): #爬取20页的贴吧,1048个帖子

url='https://tieba.baidu.com/f?kw=%E7%88%B1%E6%83%85&ie=utf-8&pn='+str(i)

soup=BeautifulSoup(requests.get(url).text, 'html.parser') #用bs4解析网页

div_tags=soup.find_all('a',{'class':'j_th_tit'}) #获取当前页面每个贴的链接

for j in div_tags:

address_list.append((j.attrs)['href'])

print(len(address_list))

describe=[]

for i in range(len(address_list)):

new_proxy={'http':random.choice(proxy)}

print(i)

url_second='https://tieba.baidu.com'+address_list[i]

soup=BeautifulSoup(requests.get(url_second,proxies=new_proxy).text, 'html.parser')

div_tags=soup.find_all('div',{'class':'d_post_content j_d_post_content'}) #每个人发表的内容

for i in div_tags:

describe.append(''.join(i.text.split()))#或者用正则 re.compile(r'\s+').sub('',i.text)

describe

二、词云

用jieba分词处理下数据,然后用图片展示下结果

from wordcloud import WordCloud, ImageColorGenerator

import matplotlib.pyplot as plt

import jieba

import numpy as np

from PIL import Image

text = ' '.join(jieba.cut(''.join(describe)))

font = r'C:\Windows\Fonts\Hiragino.ttf'

mask = np.array(Image.open("XYJY.png"))

wc = WordCloud(mask=mask,font_path=font, mode='RGBA', background_color=None).generate(text)

image_colors = ImageColorGenerator(mask)

wc.recolor(color_func=image_colors)

# 显示词云

plt.imshow(wc)

plt.axis('off')

plt.show()

wc.to_file('wordcloud.png')

总结:目前只能爬取发表的内容,其他人的回复暂时没搞定,后续研究下

更详细代码在github:https://github.com/Cherishsword/Love-bar