环境win7 Python 3.6

工具PyCharm2017

直接命令 pip install cx_Oracle

导入cx_Oracle

import cx_Oracle

1.Python连接数据库 写法有多种 百度很多

try:

tns = cx_Oracle.makedsn('localhost','1521','ORABI')

db = cx_Oracle.connect('username','password',tns)

print('连接成功...')

except Exception as e:

print(e)

print('连接失败...')

2.建立游标cursor 并执行 查询,建表,插入,删除

创建游标 执行SQL 并提交 关闭游标 关闭连接

建表

cr = db.cursor()

sql = ("CREATE TABLE tb_products("

"titles char(100),"

"shops char(30),"

"deals char(20),"

"prices char(10),"

"locations char(50))")

cr.execute(sql)

db.commit()

cr.close()

db.close()

查询数据

cr = db.cursor()

sql = 'select * from GB_USERINFO'

cr.execute(sql)

list = cr.fetchall()

for i in list:

print(i)

cr.close()

db.close()

插入数据

cr = db.cursor()

sql = ("insert into tb_products(TITLES,SHOPS,DEALS,PRICES,LOCATIONS) VALUES(:1,:2,:3,:4,:5)")

其中这边需要注意 网上大多都是关于写入MYSQL数据库 VALUES后面所带参数为%s 但是这样写Oracle会报

ora-01036: 非法的变量名/编号 错误 所以这边应为 :1 ,:2

result 可接收爬取值传入values后面的参数内

cr.execute(sql, result)

db.commit()

其中爬取数据中若带有特殊字符也应处理掉,否则可能会出现转码错误

贴上完整代码 爬取代码参考天善崔庆才大神的

# coding=utf-8

import cx_Oracle

from selenium import webdriver

from selenium.common.exceptions import TimeoutException

from selenium.webdriver.common.by import By

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.support.wait import WebDriverWait

from config import *

from urllib.parse import quote

from pyquery import PyQuery as pq

browser = webdriver.Chrome()

try:

tns = cx_Oracle.makedsn('localhost','1521','ORABI')

db = cx_Oracle.connect('username','password',tns)

print('连接成功...')

except Exception as e:

print(e)

print('连接失败...')

# browser = webdriver.PhantomJS(service_args=SERVICE_ARGS)

'''

chrome_options = webdriver.ChromeOptions()

chrome_options.add_argument('--headless')

browser = webdriver.Chrome(chrome_options=chrome_options)

'''

wait = WebDriverWait(browser, 10)

def index_page(page):

"""

抓取索引页

:param page: 页码

"""

print('正在爬取第', page, '页')

try:

url = 'https://s.taobao.com/search?q=' + quote(KEYWORD)

browser.get(url)

if page > 1:

input = wait.until(

EC.presence_of_element_located((By.CSS_SELECTOR, '#mainsrp-pager div.form > input')))

submit = wait.until(

EC.element_to_be_clickable((By.CSS_SELECTOR, '#mainsrp-pager div.form > span.btn.J_Submit')))

input.clear()

input.send_keys(page) #send_keys() 中 k 是小写

submit.click()

wait.until(

EC.text_to_be_present_in_element((By.CSS_SELECTOR, '#mainsrp-pager li.item.active > span'), str(page)))

wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, '.m-itemlist .items .item')))

get_product()

except TimeoutException:

index_page(page)

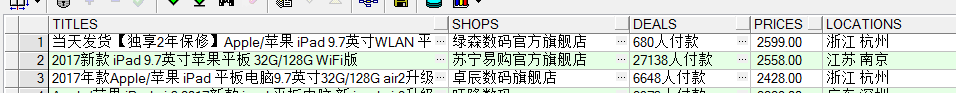

def get_product():

"""

抓取产品数据

"""

html = browser.page_source

doc = pq(html)

items = doc('#mainsrp-itemlist .items .item').items()

for item in items:

product = (

item.find('.title').text(),

item.find('.shop').text(),

item.find('.deal-cnt').text(),

item.find('.price').text().replace('¥',''),

item.find('.location').text()

)

print(product)

save_to_oracle(product)

def save_to_oracle(result):

"""

把抓取的数据写入数据库

:param result:

:return:

"""

cr = db.cursor()

sql = ("insert into tb_products(TITLES,SHOPS,DEALS,PRICES,LOCATIONS) VALUES(:1,:2,:3,:4,:5)")

try:

cr.execute(sql, result)

db.commit()

print('保存成功!')

except Exception as e:

print(e)

print('保存失败...')

#创建表

def create_table():

"""

在数据库中创建表tb_products

:return:

"""

cr = db.cursor()

sql = ("CREATE TABLE tb_products("

"titles char(100),"

"shops char(30),"

"deals char(20),"

"prices char(10),"

"locations char(50))")

try:

cr.execute(sql)

db.commit()

print('建表成功!')

except:

print('建表失败...')

#查询

def selectsql():

"""

测试查询表数据

:return:

"""

cr = db.cursor()

sql = 'select * from GB_USERINFO'

cr.execute(sql)

list = cr.fetchall()

for i in list:

print(i)

cr.close()

#

def main():

#create_table()

for i in range(1, MAX_PAGE + 1):

index_page(i)

db.close()

if __name__ == '__main__':

main()

config.py内代码

MAX_PAGE = 100

KEYWORD = 'ipad'